Designing Agentic Systems for the Enterprise: Global Standards, Best Practices, and Energy Industry Applications

Introduction: What Is an Agentic System?

In simple terms, an agentic system is an AI-driven system composed of autonomous “agents” that can perceive information, make decisions, and take actions – often without needing a prompt for every step. Unlike traditional software or even basic AI that only responds to direct inputs, an agentic AI actively engages with its environment and pursues goals on its own linkedin.com. For example, a large language model like GPT-4 becomes agentic when it not only generates answers, but also decides when to query data sources, call APIs, or execute tasks in order to fulfill a high-level objective. In essence, agentic systems involve AI programs that have agency: they can reason, adapt, and even collaborate with humans or other agents to achieve outcomes, rather than just following a fixed script daitodesign.com. This evolution – from passive chatbot to proactive problem-solver – is a pivotal shift in AI. It opens the door to AI “co-workers” that handle complex workflows autonomously, which is why many enterprise innovators are so excited about agentic AI.

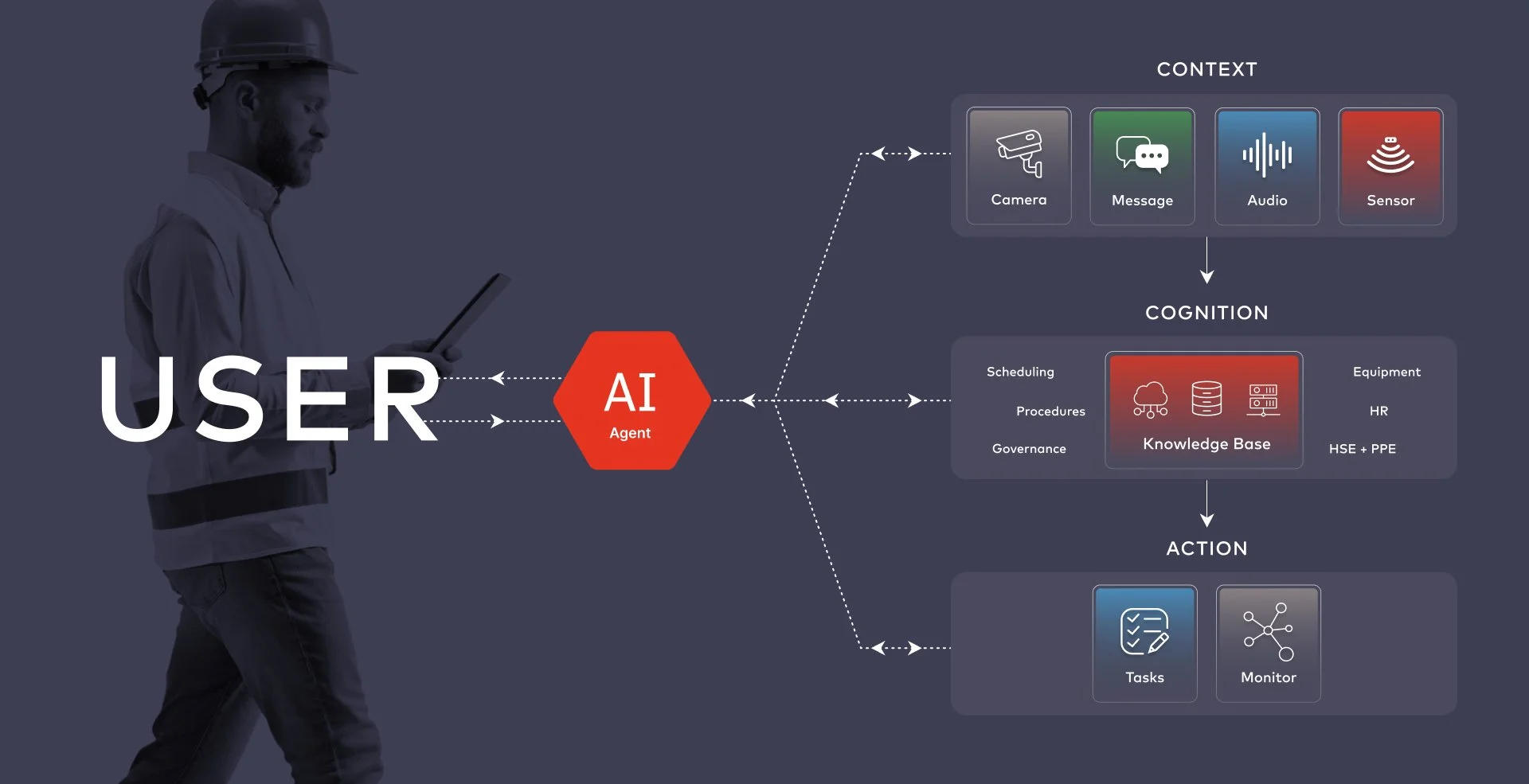

An agentic system concept: the AI agent mediates between the user and various layers of context (sensors, messages, audio, etc.), cognition (knowledge bases, rules, scheduling), and action (executing tasks, monitoring outcomes). This architecture ensures the agent perceives real-world context and acts accordingly, rather than waiting for explicit human instructions.

Today, terms like Agentic AI and AI agents are buzzing from tech blogs to boardrooms. Simply put, an agentic system is what turns a clever AI model into a self-directed actor in your software ecosystem. For newcomers, imagine the difference: a normal program might show you a chart of energy usage, but an agentic system might actually adjust the thermostat or reroute power based on that data – on its own initiative. This autonomy is powerful, but it also introduces new challenges in design, architecture, and user experience. In the sections below, we’ll explore a vision for managing these agentic systems at scale, best practices for building them, and why they matter for industries like energy. We’ll also look at how good design and engineering – from UX to DevOps – can make or break an enterprise-grade agent, and we’ll survey the tools and standards emerging to support this next generation of AI products.

The Vision: A Global Agent Repository and Standard Architecture

As organizations experiment with dozens of AI agents, one big question arises: How do we keep all these agents organized, consistent, and shareable? This is the core idea behind a Global Agent Repository and a Standard Architecture for agentic systems. In an online discussion, one engineer described struggling with “many working micro-agents” and asked how to standardize them for portability and usability reddit.com. The consensus from practitioners is that we need a disciplined approach:

Centralized Repository: Maintain a central registry where each agent’s version, documentation, and capabilities are recorded. This makes it easy for teams to discover existing agents and reuse them, rather than reinventing the wheel for every new project. A centralized repository also lets you manage updates and ensure everyone is using approved agent versions reddit.com. In essence, treat agents as you would microservices or APIs in an enterprise service catalog.

Standard Communication Protocols: Establish a common architecture for how agents talk to other systems (and to each other). For instance, you might decide all agents expose a RESTful API or conform to a particular messaging schema. Using consistent data formats (e.g. JSON payloads for inputs/outputs) and interface contracts ensures that a planning agent built by Team A can seamlessly hand off tasks to a data-fetching agent built by Team B reddit.com. This interoperability is crucial if you envision a “plug-and-play” ecosystem of agents.
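To make the idea of a shared messaging schema concrete, here is a minimal Python sketch of a JSON message envelope that every agent could accept and emit. The class name and its fields (`sender`, `recipient`, `task`, `payload`, `correlation_id`) are hypothetical choices for illustration, not an established standard.

```python
import json
import uuid
from dataclasses import dataclass, field, asdict

# Hypothetical envelope shared by all agents; field names are illustrative.
@dataclass
class AgentMessage:
    sender: str
    recipient: str
    task: str
    payload: dict
    # Lets logs from different agents be stitched into one trace.
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        data = json.loads(raw)
        # Reject messages that break the contract instead of guessing.
        missing = {"sender", "recipient", "task", "payload"} - set(data)
        if missing:
            raise ValueError(f"message missing fields: {sorted(missing)}")
        return cls(**data)


msg = AgentMessage("PlannerAgent", "DataFetchAgent", "fetch_load_data", {"region": "north"})
restored = AgentMessage.from_json(msg.to_json())  # round-trips to an equal message
```

Because every agent parses the same envelope, a hand-off between teams reduces to agreeing on the `task` names and the shape of each `payload`.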

Containerization and Portability: To make deployment and scaling easier, package agents in standardized containers or runtime environments. Docker images or similar containerization help sandbox each agent with its required libraries and tools, so it can run on any infrastructure. This approach greatly aids portability – whether the agent is moved from a developer’s laptop to a cloud cluster or shared with a partner organization reddit.com.

Unified Lifecycle and Governance: The vision of a global agent repository also comes with governance practices. Define guidelines for developing and deploying agents – covering coding standards, testing, naming conventions, error handling, logging, and security. Regularly review agents for compliance with these standards reddit.com. By enforcing an architectural discipline, you prevent an explosion of “rogue” scripts. As one AI engineer cautioned, without a unified architecture you’ll “end up with a pile of smart scripts, not a system”. In other words, portability and usability come from constraint: agreeing on some rules upfront will save countless hours later.

Lightweight Orchestration: Another rationale for a standard architecture is to support a shared agent runtime or orchestration layer that all agents plug into. Think of it as an operating system for your agents that handles common concerns such as message passing, retries on failure, load balancing, and security policies reddit.com. When agents don’t each have to re-implement these low-level behaviors, developers can focus on the unique logic of each agent. A lightweight orchestration layer can also mediate communication between agents, making it easier to compose complex workflows from simple building blocks.
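A shared runtime can start out very small. The sketch below, with hypothetical class and method names, routes a message from one agent to another by name and enforces a single call policy in one place. Retries and load balancing would hook into `send` in the same way but are left out to keep it short.

```python
from typing import Callable, Dict, Iterable, Set

Handler = Callable[[dict], dict]


class AgentRuntime:
    """Hypothetical minimal runtime: routes messages between agents and applies
    one shared call policy, so individual agents don't reimplement it."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self._may_call: Dict[str, Set[str]] = {}

    def register(self, name: str, handler: Handler, may_call: Iterable[str] = ()) -> None:
        self._handlers[name] = handler
        self._may_call[name] = set(may_call)

    def send(self, sender: str, recipient: str, payload: dict) -> dict:
        # Central policy check: a sender may only call agents it is cleared for.
        if recipient not in self._may_call.get(sender, set()):
            raise PermissionError(f"{sender} may not call {recipient}")
        handler = self._handlers.get(recipient)
        if handler is None:
            raise LookupError(f"unknown agent: {recipient}")
        return handler(payload)


runtime = AgentRuntime()
runtime.register("DataFetchAgent", lambda payload: {"rows": 3})
runtime.register("PlannerAgent", lambda payload: {}, may_call={"DataFetchAgent"})
```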

In summary, the global repository and standard architecture vision is about treating agents “less like isolated tools and more like composable services” with defined contracts and infrastructure reddit.com. Just as enterprises today manage microservices through API gateways, service catalogs, and DevOps pipelines, we’ll need similar structures for AI agents. The end goal is agility with control: you want teams to rapidly deploy new agents for specific needs, but within a framework that ensures those agents can interoperate, be audited, and be trusted across the organization.

Best Practices for Building Agentic Systems

How can we actually implement the above vision in day-to-day development? Let’s expand on some best practices that have emerged for building robust agentic systems. These include standardized naming, error handling, logging, modularity, and more – the bread-and-butter of good software engineering, adapted to the world of AI agents:

Consistent Naming Conventions: Adopting a clear naming scheme for agents and their components is key in a large system. Agents should have descriptive names that reflect their role (e.g. “PricingOptimizerAgent” or “GridMonitorAgent”) and possibly version indicators. Consistent naming makes it easier to reason about orchestrations and to search the agent repository. It’s also wise to standardize how agents refer to common concepts (for example, if multiple agents use a shared memory or context, use the same field names everywhere). A Reddit contributor suggested that when using frameworks like LangChain or CrewAI, teams should enforce naming conventions for agent roles and memory structures across the board reddit.com. This prevents confusion and collisions when agents are composed into larger workflows.
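One way to enforce a naming convention is to check it automatically when an agent is registered. This sketch assumes a hypothetical convention (UpperCamelCase names ending in `Agent`, with an optional `@major.minor.patch` version suffix) and defines shared memory keys once as constants so every agent uses the same field names.

```python
import re

# Hypothetical convention: "GridMonitorAgent" or "GridMonitorAgent@1.2.0".
AGENT_NAME = re.compile(r"[A-Z][A-Za-z0-9]*Agent(@\d+\.\d+\.\d+)?")


class MemoryKeys:
    """Shared-memory field names, defined once instead of retyped in each agent."""
    CONVERSATION_ID = "conversation_id"
    TASK_HISTORY = "task_history"
    USER_CONTEXT = "user_context"


def check_agent_name(name: str) -> bool:
    """Return True if the name follows the (assumed) naming convention."""
    return AGENT_NAME.fullmatch(name) is not None
```

A registry or CI job could call `check_agent_name` and refuse agents that fail it, which keeps the catalog searchable as it grows.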

Robust Error Handling & Recovery: Agentic systems must expect the unexpected. Since agents operate with a degree of autonomy, they should be built to handle errors gracefully and even recover or retry if possible. Best practices include defining a standard error format (so all agents report failures in a uniform way) and implementing retry logic with backoff for transient issues (e.g. if an API call fails). One strategy is to wrap each agent’s core logic in a common error-handling decorator or middleware that catches exceptions and translates them into a structured error output or invokes fallback behaviors reddit.com. For example, an agent that fails to get a response from a language model might return a “cannot complete request” result rather than simply crashing. Consistency here helps when different agents are chained together – it ensures a downstream agent isn’t left hanging or, worse, acting on partial results. In mission-critical scenarios, agents might also escalate to human operators when certain errors occur (more on human-in-the-loop design later).
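The decorator pattern described above might look like the following sketch. `TransientError`, the retry defaults, and the shape of the error dictionary are assumptions made for illustration; a real system would decide which exceptions count as transient (timeouts, rate limits) based on the APIs it calls.

```python
import functools
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def agent_errors(retries: int = 3, base_delay: float = 0.5):
    """Wrap an agent function: retry transient errors with exponential backoff,
    and turn any final failure into a structured error result instead of a crash."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return {"status": "ok", "result": fn(*args, **kwargs)}
                except TransientError as exc:
                    if attempt == retries:
                        return {"status": "error", "agent": fn.__name__,
                                "error": "cannot complete request", "detail": str(exc)}
                    time.sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
                except Exception as exc:  # non-transient: fail fast, still structured
                    return {"status": "error", "agent": fn.__name__,
                            "error": type(exc).__name__, "detail": str(exc)}
        return wrapper
    return decorator
```

Because every wrapped agent returns the same `status` field, a downstream agent can check it before acting, rather than receiving a partial result or an unhandled exception.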

Unified Logging and Observability: Effective logging is the lifeblood of debugging complex AI workflows. Every agent should emit logs in a standard format (including timestamps, agent name, correlation IDs for traceability, etc.) so that you can trace how a request flows through various agents. Logging should capture key decisions and actions – for instance, what inputs were received, what tool was invoked, what result came back from an API call, and how the agent’s internal reasoning led to a certain output. By using a shared logging schema and possibly a centralized log aggregator, operators can monitor the whole multi-agent system in one view. Community best practices suggest starting with “unified logging [and] consistent I/O contracts” from day one, as it will “save you weeks later” when tracking down issues reddit.com. New tooling is emerging here: for example, the team behind the LangChain framework introduced LangSmith, a platform for tracing agent prompts and actions, reflecting the need for better observability. In short, log everything an agent does (within privacy/security bounds) – you’ll thank yourself when it’s time to explain or troubleshoot an agent’s behavior.
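As an illustration of a shared log schema, the sketch below uses Python's standard `logging` module with a custom formatter that emits one JSON object per line. The field names (`agent`, `correlation_id`, `event`, plus free-form `details`) are hypothetical; a centralized aggregator would then index these fields.

```python
import json
import logging
import time


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object with a shared set of fields."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "agent": record.name,
            "correlation_id": getattr(record, "correlation_id", None),
            "event": record.getMessage(),
        }
        # Free-form, per-event fields (tool name, inputs, results...).
        entry.update(getattr(record, "details", {}))
        return json.dumps(entry)


def get_agent_logger(agent_name: str) -> logging.Logger:
    """Return a logger named after the agent, configured once with the JSON format."""
    logger = logging.getLogger(agent_name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger


log = get_agent_logger("GridMonitorAgent")
log.info("tool_invoked", extra={"correlation_id": "req-123",
                                 "details": {"tool": "scada_read", "feeder": "F12"}})
```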

Modular, Composable Design: A hallmark of agentic system design is modularity. Each agent should ideally do one thing well (akin to the microservice philosophy). This makes it easier to update or replace agents without breaking the whole system. Design agents around clear input/output contracts – for instance, define a JSON schema for what data an agent expects and what it will return reddit.com. This acts like an OpenAPI spec but for your AI agents, enabling different components to mix and match. With modular agents, you can compose higher-level workflows by chaining or orchestrating these building blocks. One recommendation is composition over spaghetti: rather than having agents call each other in hard-coded, ad-hoc ways, use an orchestration graph or workflow engine so that each agent is a reusable node in a directed acyclic graph (DAG) of tasks reddit.com. This could be as simple as a Python script orchestrating calls in sequence or as sophisticated as a dedicated orchestration platform. The key is that the flow is explicit and maintainable. Modular design also implies agents should be stateless where possible (relying on shared context or databases for state), which makes scaling and replacing them easier.
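For the orchestration-graph idea, Python's standard library already includes a topological sorter (`graphlib`, Python 3.9+). The sketch below treats each agent as a function that receives its upstream agents' outputs; the step names and the toy forecasting logic are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set

# Each agent node receives {upstream_name: upstream_output} and returns its output.
Step = Callable[[Dict[str, dict]], dict]


def run_dag(steps: Dict[str, Step], deps: Dict[str, Set[str]]) -> Dict[str, dict]:
    """Run each agent node after the nodes it depends on, passing their outputs in.
    Raises graphlib.CycleError if the declared dependencies contain a cycle."""
    graph = {name: set(deps.get(name, set())) for name in steps}
    results: Dict[str, dict] = {}
    for node in TopologicalSorter(graph).static_order():
        upstream = {dep: results[dep] for dep in graph[node]}
        results[node] = steps[node](upstream)
    return results


# Hypothetical three-agent workflow: fetch load data -> forecast peak -> size a bid.
steps = {
    "fetch_load": lambda up: {"load_mw": [100, 120, 110]},
    "forecast": lambda up: {"peak_mw": max(up["fetch_load"]["load_mw"]) + 10},
    "bid": lambda up: {"bid_mw": up["forecast"]["peak_mw"]},
}
deps = {"forecast": {"fetch_load"}, "bid": {"forecast"}}
results = run_dag(steps, deps)  # results["bid"] == {"bid_mw": 130}
```

The dependency table is the explicit, reviewable "flow" the paragraph calls for: swapping one agent means replacing one entry in `steps` without touching the others.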

Standardized Metadata & Documentation: To support the global repository concept, embed rich metadata with each agent. This includes not just the functional API schema, but also human-readable descriptions of the agent’s purpose, its author/owner, version history, and any special requirements (like “needs GPU” or “calls external service X”). Document any assumptions the agent makes and the “contract” it upholds. For example: “ForecastingAgent – Given past sales data, predicts next week’s sales. Assumes input data is CSV with columns A, B, C. Outputs JSON with fields X, Y, Z.” Such documentation can live in a repository README or a central registry UI. Having this standard info for every agent is invaluable when dozens of agents exist – it’s essentially your catalog for discovery. Some teams even maintain a lightweight Agent Registry in YAML or a database, which can be queried to find an agent that, say, “calculates pricing” or “monitors sensors” reddit.com. This registry approach accelerates development (reuse beats rebuild) and helps with governance (you can quickly audit which agents have certain privileges or use certain data sources).
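A lightweight registry can start as a handful of records plus a search function. The sketch below keeps entries in memory; the `AgentCard` fields, the substring-based search, and the example agents are assumptions, and a real registry would likely load entries from YAML files or a database.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class AgentCard:
    """Hypothetical registry entry; field names are illustrative."""
    name: str
    version: str
    owner: str
    description: str
    capabilities: List[str] = field(default_factory=list)
    requirements: List[str] = field(default_factory=list)  # e.g. "needs GPU"


class AgentRegistry:
    def __init__(self) -> None:
        self._cards: Dict[Tuple[str, str], AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        self._cards[(card.name, card.version)] = card

    def find(self, capability: str) -> List[AgentCard]:
        """Discovery: agents whose capabilities or description mention the term."""
        term = capability.lower()
        return [c for c in self._cards.values()
                if any(term in cap.lower() for cap in c.capabilities)
                or term in c.description.lower()]

    def requiring(self, requirement: str) -> List[str]:
        """Governance: names of agents that declare a given requirement."""
        return sorted({c.name for c in self._cards.values() if requirement in c.requirements})


registry = AgentRegistry()
registry.register(AgentCard("PricingOptimizerAgent", "1.0.0", "trading-team",
                            "Calculates pricing for day-ahead bids",
                            ["calculates pricing"], ["calls external service X"]))
registry.register(AgentCard("GridMonitorAgent", "2.1.0", "grid-ops",
                            "Monitors sensors on distribution feeders",
                            ["monitors sensors"]))
```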

Consistent Deployment & DevOps: Treat agents as first-class deliverables in your development pipeline. Use infrastructure-as-code or scripts to deploy agents in a uniform way (for example, each agent has a CI/CD job that builds its container and registers it with the repository). Implement monitoring for each agent’s health (CPU/memory usage, response latency, error rates, etc.) just as you would for a microservice. Over time, having automated tests for agent behaviors is important too – especially regression tests to catch if a new model update changes an agent’s outputs undesirably. The more you can automate and standardize the DevOps of agents, the more reliably you can scale up their usage across projects. Some organizations are even creating internal “Agent Platforms” that abstract away the hosting details and give developers a self-service way to deploy new agents while automatically applying the standard architecture and logging.
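Regression tests for agents can compare fresh outputs against recorded "golden" cases, letting numeric outputs drift within a tolerance because model updates rarely reproduce numbers exactly. The function name, the 5% default tolerance, and the toy forecaster are hypothetical; in CI the check would typically run as a test that fails on any reported drift.

```python
import math
from typing import Callable, List, Tuple


def regression_check(agent: Callable[[dict], dict],
                     golden_cases: List[Tuple[dict, dict]],
                     rel_tol: float = 0.05) -> List[str]:
    """Re-run an agent on recorded inputs and report fields that drifted from the
    recorded outputs. Numbers may differ by rel_tol; other values must match exactly."""
    failures = []
    for i, (inputs, expected) in enumerate(golden_cases):
        actual = agent(inputs)
        for key, want in expected.items():
            got = actual.get(key)
            if isinstance(want, (int, float)) and isinstance(got, (int, float)):
                ok = math.isclose(got, want, rel_tol=rel_tol)
            else:
                ok = got == want
            if not ok:
                failures.append(f"case {i}: {key} expected {want!r}, got {got!r}")
    return failures


# Hypothetical agent under test and one recorded case.
def load_forecaster(inputs: dict) -> dict:
    return {"next_week_load": inputs["last_week_load"] * 1.02, "unit": "MWh"}


golden = [({"last_week_load": 100.0}, {"next_week_load": 101.0, "unit": "MWh"})]
```

In a CI job, `assert not regression_check(load_forecaster, golden)` would block a model update whose outputs moved outside the tolerance.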

By following these best practices, teams create agentic systems that are maintainable and scalable, not just clever demos. A Reddit user nicely summarized their approach as a five-part checklist: define a standard interface for every agent, wrap agents in a common abstraction for consistency, keep a registry of agents, enforce naming/memory conventions, and prefer structured composition of agents (like DAGs) over ad-hoc chaining reddit.com reddit.com. These habits turn a collection of AI scripts into a robust system.

Applying Agentic Principles in the Energy Industry

To make these ideas more concrete, let’s look at how agentic systems can drive large-scale operations and digital transformation in the energy sector – from utilities to renewables to energy trading. The energy industry is ripe for AI-driven automation due to its vast, complex, and real-time nature (think smart grids, power plants, and energy markets). Here’s how agentic systems can help:

Utility Grid Operations (Smart Grids): Power utilities are deploying autonomous agents to manage grid stability and optimize distribution. For example, an agentic system can monitor grid health continuously and reroute power when it detects a fault or overload, effectively creating a self-healing grid. AI agents can anticipate equipment failures or line outages and isolate problems before they cascade xenonstack.com. Additionally, agents can dynamically balance electricity load between traditional power plants and renewable sources. By analyzing demand in real time, an agent might direct more solar energy to the grid during a sunny interval and dial down gas turbine output, maximizing efficiency and reducing waste xenonstack.com. These tasks, once done by control room operators manually, can be handled faster and more precisely by agentic automation. The result is a more resilient grid with fewer blackouts and better response to surges or drops in supply.
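The load-balancing idea can be reduced to a toy merit-order rule: serve demand with available solar first, then fill the remainder with gas. This sketch is a deliberate simplification that ignores ramp rates, network constraints, reserves, and storage; the function and field names are hypothetical.

```python
def dispatch(demand_mw: float, solar_available_mw: float, gas_capacity_mw: float) -> dict:
    """Toy merit-order dispatch: use available solar first (near-zero marginal cost),
    then fill the remainder with gas up to its capacity, and report any shortfall."""
    solar = min(demand_mw, solar_available_mw)
    gas = min(demand_mw - solar, gas_capacity_mw)
    return {
        "solar_mw": solar,
        "gas_mw": gas,
        "shortfall_mw": demand_mw - solar - gas,          # unmet demand: escalate
        "curtailed_solar_mw": solar_available_mw - solar,  # surplus: store or curtail
    }
```

An agent running this every few seconds "dials down" gas automatically as solar output rises, and a non-zero shortfall is exactly the kind of condition that should trigger an alert to operators.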

Renewables Integration & Management: The rise of wind and solar brings variability that agentic systems are well-suited to handle. AI agents in a renewable energy context can forecast production (e.g. predicting tomorrow’s solar output from weather data) and make real-time adjustments. For instance, a wind farm could use an agent to predict wind energy generation hours ahead xenonstack.com and coordinate with battery storage agents to charge or discharge at optimal times. An energy storage agent might automatically decide when to store excess solar power and when to release it to the grid, based on price signals and demand forecasts xenonstack.com. In effect, these agents act as a team: one watches the weather and generation potential, another manages the batteries, and together they smooth out supply fluctuations. Such agentic control systems help utilities maintain stability even as renewables supply a larger share of power. They also enable climate adaptation strategies – for example, agents can curtail or adjust operations in response to extreme weather events (like preemptively protecting equipment during a hurricane, then rapidly restoring service after). By automating these decisions, energy companies can operate closer to the margins of capacity and still reliably meet demand, unlocking more value from renewable assets.
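A storage agent's charge/discharge decision can be sketched as a threshold rule driven by a price forecast. The quantile thresholds, the state-of-charge limits, and the function names below are assumptions for illustration; a production agent would more likely solve an optimization over the whole forecast horizon.

```python
from typing import List, Tuple


def price_thresholds(forecast: List[float], low_q: float = 0.25,
                     high_q: float = 0.75) -> Tuple[float, float]:
    """Pick charge/discharge price levels from the forecast's lower and upper
    quantiles (simple index-based quantiles, rounded down, no interpolation)."""
    ordered = sorted(forecast)
    last = len(ordered) - 1
    return ordered[int(low_q * last)], ordered[int(high_q * last)]


def battery_action(price: float, soc: float, charge_below: float, discharge_above: float,
                   soc_min: float = 0.1, soc_max: float = 0.9) -> str:
    """Charge when energy is cheap (e.g. a midday solar surplus), discharge when it is
    expensive, otherwise hold. soc is state of charge as a fraction of capacity."""
    if price <= charge_below and soc < soc_max:
        return "charge"
    if price >= discharge_above and soc > soc_min:
        return "discharge"
    return "hold"


low, high = price_thresholds([50, 10, 40, 20, 30])  # (20, 40)
```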

Energy Trading and Markets: Energy markets are fast-moving and complex – perfect terrain for autonomous agents. In large-scale trading operations (whether utilities managing contracts or traders in energy firms), agentic systems can act as tireless, lightning-fast analysts and negotiators. For example, an energy trading agent can monitor market prices, weather forecasts, and grid conditions, and execute buy/sell orders within milliseconds to exploit arbitrage opportunities xenonstack.com. Unlike a human trader, an AI agent can process gigabytes of data and react 24/7, ensuring optimal pricing decisions around the clock. Beyond just speed, agents can facilitate new market mechanisms: imagine a decentralized trading network where household solar batteries automatically trade energy with each other. An agent could represent each home, negotiating and executing peer-to-peer energy trades via smart contracts xenonstack.com. This is already starting to happen in some regions – essentially creating “energy marketplaces” run by AI. By standardizing these agents with proper oversight (to avoid, say, excessive risk-taking), energy companies can drastically improve market efficiency and even offer new products (like smart tariffs that adjust rates dynamically through agent-based negotiation).

Predictive Maintenance & Field Operations: Large utilities and renewable operators have thousands of assets (transformers, turbines, solar panels) spread over wide areas. Agentic systems can assist in maintenance by continuously analyzing sensor data from equipment and flagging anomalies. For instance, an AI agent monitoring wind turbine vibrations might detect a subtle change indicating a mechanical issue and schedule a maintenance work order before a breakdown occurs xenonstack.com. Combined with drones or IoT sensors, agents can prioritize repairs, order replacement parts, and dispatch field crews efficiently – all steps towards a more autonomous maintenance workflow. In practice, this might look like a “virtual operator” agent that oversees dozens of power plants’ telemetry and only alerts human engineers when necessary, handling routine adjustments on its own. For energy companies, this means fewer costly outages and a shift from reactive fixes to preventive care, which is both safer and more cost-effective.
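Detecting "a subtle change" in vibration data is often approached with a rolling baseline. The sketch below flags readings that sit more than a chosen number of standard deviations from the recent mean (a rolling z-score); the class name, window size, and threshold are hypothetical, and in practice a flag would feed a work-order system rather than just return `True`.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flag readings that deviate from the recent baseline by more than
    `threshold` standard deviations (a rolling z-score)."""

    def __init__(self, window: int = 50, threshold: float = 4.0) -> None:
        self.readings = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        anomalous = False
        # Only judge once the baseline window is full.
        if len(self.readings) == self.readings.maxlen:
            mu, sigma = mean(self.readings), pstdev(self.readings)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.threshold
        if not anomalous:
            self.readings.append(value)  # keep anomalies out of the baseline
        return anomalous
```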

These examples barely scratch the surface, but they illustrate a pattern: agentic systems excel in environments that are data-rich, complex, and require rapid decisions – exactly the scenario in modern energy operations. Pioneering projects have shown that agentic AI can optimize grid efficiency, integrate renewables smoothly, lower trading costs, and improve reliability xenonstack.com xenonstack.com. In short, for energy and utilities firms undergoing digital transformation, autonomous agents aren’t science fiction; they’re becoming a competitive advantage.

Of course, deploying agents in such high-stakes settings demands caution. Energy is a critical infrastructure – mistakes can be costly or dangerous. That’s why the best practices we discussed (standard architecture, error handling, etc.) and the UX considerations we’ll cover next are so important. They ensure that even as we hand more control to AI agents, we maintain transparency, safety, and human oversight where needed.

UX Design: Building Trust and Transparency in Agentic Systems

No matter how advanced an agentic system is, its success in an enterprise will often hinge on user experience (UX). This might sound surprising – aren’t these autonomous agents doing their own thing under the hood? But in reality, human operators, engineers, or other end-users will interact with agentic systems at various points: configuring them, supervising their operations, reviewing their decisions, and occasionally overriding or debugging them. A well-designed UX can make these interactions intuitive and instill confidence, whereas poor UX can lead to confusion and mistrust (“What on earth is the AI doing now?”). Here are some critical UX principles and patterns for agentic systems:

Make the “Invisible Thinking” Visible: One challenge with AI agents is that a lot of their work happens behind the scenes (e.g., an agent reasoning over data or making a decision). To build operator trust, the system’s UI should surface the agent’s mental model and rationale in a human-understandable way. For instance, if an agent decides to purchase energy on the market, the interface might show a brief explanation: “Agent chose to buy 50 MW because price dropped below $X and a supply shortfall is forecasted.” Techniques like intent visualization or confidence indicators can be very effective. Daito Design refers to this as Intent Signaling: giving clear visual or textual cues about why an agent is recommending something daitodesign.com. This could include showing which data points influenced the decision, or a simple highlight like “High confidence” vs “Low confidence” when the agent suggests an action. By illuminating the agent’s internal logic, we help users feel more in control and able to trust the agent’s autonomy.
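Intent signaling can start with something as simple as turning an agent's decision record into a one-line rationale with a confidence label. The `Recommendation` fields and the 0.8/0.5 confidence cut-offs below are hypothetical; how an agent estimates its confidence in the first place is a separate problem.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Recommendation:
    action: str
    confidence: float        # 0.0-1.0, as estimated by the agent
    drivers: Dict[str, str]  # data points that influenced the decision


def explain(rec: Recommendation, high: float = 0.8, low: float = 0.5) -> str:
    """Turn an agent decision into a one-line, human-readable rationale."""
    if rec.confidence >= high:
        label = "High confidence"
    elif rec.confidence >= low:
        label = "Medium confidence"
    else:
        label = "Low confidence"
    reasons = "; ".join(f"{k}: {v}" for k, v in rec.drivers.items())
    return f"[{label}] Agent chose to {rec.action} because {reasons}."


rec = Recommendation("buy 50 MW", 0.86, {"price": "dropped below threshold",
                                          "forecast": "supply shortfall expected"})
```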

Design for an Autonomy Spectrum (Graduated Control): Not every task should be fully autonomous, and not every moment requires human approval. The best agentic systems are designed with an autonomy gradient – a spectrum from fully manual to fully automatic – that can adjust based on context daitodesign.com. For example, routine tasks (like balancing voltage in a transformer) might be handled 100% by the agent, whereas unusual or high-impact decisions (like shutting down a substation) might require human confirmation. UX can support this by providing modes or settings that let users dial the level of autonomy up or down. In practice, this might appear as an “automatic vs advisory mode” toggle for an agent, or a policy that certain recommendations always come to a human for sign-off. By acknowledging a spectrum of autonomy, you also alleviate the “all-or-nothing” fear – users know they can intervene if needed, which paradoxically makes them more comfortable letting the agent run on its own. As a designer or product owner, consider where on that spectrum each agent operates and communicate that clearly in the UI (for instance, labels or color-coding for actions the agent took autonomously vs actions waiting on human input).
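An autonomy gradient can be expressed as a small routing policy that decides whether a proposed action runs immediately or waits for human sign-off. The three modes, the impact labels, and the `ALWAYS_CONFIRM` set are hypothetical examples drawn from the scenarios above.

```python
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"      # agent only recommends
    SUPERVISED = "supervised"  # agent acts on routine tasks, asks on high-impact ones
    AUTOMATIC = "automatic"    # agent acts, except on actions that always need sign-off


# Hypothetical actions that require human confirmation in every mode.
ALWAYS_CONFIRM = {"shutdown_substation"}


def route_action(action: str, impact: str, mode: Mode) -> str:
    """Return 'execute' or 'request_approval' for a proposed agent action."""
    if mode is Mode.ADVISORY or action in ALWAYS_CONFIRM:
        return "request_approval"
    if mode is Mode.SUPERVISED and impact == "high":
        return "request_approval"
    return "execute"
```

The returned label maps directly onto the UI cues described above, for example color-coding actions taken autonomously versus actions waiting on human input.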

Mission-Control Style Interfaces: Traditional dashboards may fall short when overseeing a swarm of intelligent agents. Instead, UX professionals are finding they need to create mission control-style interfaces, especially for complex industrial or enterprise agent networks daitodesign.com. What does this mean? Think of a control center with live status feeds, alerts, and the ability to drill down on any component – but streamlined for software. In an agentic system UI, a mission-control interface might show all active agents, their current state (idle, running, needs attention), and any flagged anomalies. It should enable an operator to step in when something goes off-nominal. For example, if an agent encounters a scenario it’s not confident about, it could raise a visual alarm and the human operator can then dive in, inspect the logs or agent’s reasoning, and decide whether to approve or adjust the action. This design pattern emphasizes oversight with minimal intervention: most of the time the system runs itself, but when the human needs to jump in, the tools are at their fingertips. At scale, it might even look like a network graph of agents with data flows, where you can click on any node (agent) to inspect its status and recent decisions. By moving beyond static charts to interactive, real-time control panels, we give operators a sense of partnership with the AI: they remain the ultimate high-level supervisor while the agents handle the minutiae.

Facilitating Trust and Educating Users: Gaining operator trust isn’t just about showing what the AI is doing, but also setting expectations. UX can help educate users about an agent’s capabilities and limitations. When introducing an agentic system, consider guided walkthroughs or tooltips that explain, for instance, “Agent X monitors these data sources and will alert you if Y condition is met.” Make it clear when the agent will act on its own and when it will ask for input. This manages the mental model the human has of the agent. Also, provide easy escape hatches: if a user disagrees with an agent’s action, they should be able to undo it or override it without feeling they’re “fighting” the system. Simple UI affordances like an “Override” button or the ability to set certain rules (e.g. “never sell energy below $Price”) can build a sense of safety. Ultimately, trust comes from transparency + control. As UX designers, we need to give transparency (through explanations, audit trails, visualizations) and control (through adjustable settings, override options, and confirmations for critical actions).
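User-defined rules such as a price floor can be modeled as predicates that veto an agent's proposed action and report which rule blocked it, so the UI can explain the refusal instead of leaving the user "fighting" the system. The `Rule` structure, the action dictionary shape, and the $40/MWh floor are hypothetical values for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Rule:
    description: str                     # shown to the user when the rule fires
    violated_by: Callable[[dict], bool]  # True if the proposed action breaks the rule


def vet_action(action: dict, rules: List[Rule]) -> Optional[str]:
    """Return the description of the first violated rule, or None if the action is allowed."""
    for rule in rules:
        if rule.violated_by(action):
            return rule.description
    return None


# Hypothetical user setting: never sell energy below a floor price.
FLOOR_PRICE = 40
user_rules = [Rule(f"never sell energy below ${FLOOR_PRICE}/MWh",
                   lambda a: a["side"] == "sell" and a["price"] < FLOOR_PRICE)]
```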

Patterns for Debugging & Iteration: When things do go wrong (and inevitably, something will), the UX should assist the user in diagnosing and correcting the issue. This is part of the broader concept of Observability, but from a UX standpoint, it might mean providing a clear error report or log view in the interface. If an agent fails to complete a task, the user shouldn’t see just a generic “Error” – the interface should show, for example, “OrderPlacementAgent failed to call API – authentication expired.” That level of detail empowers the user to fix the problem or inform IT. Some advanced agent UIs even allow you to replay or simulate agent decisions with tweaks – for instance, a testing console where a user can run an agent on a past scenario to see why it behaved a certain way, aiding in debugging or fine-tuning. These kinds of UX features cross into developer-tooling territory, but for enterprise systems it’s often the same personas (operators, engineers) interacting with the UI. So, investing in a good UX for debugging (maybe an event timeline, a step-by-step visualization of the agent’s thought process, etc.) will pay dividends in faster issue resolution and user confidence.

In summary, UX is the glue that connects human intelligence with artificial agency. A thoughtful UX ensures that agentic systems are not black boxes sitting in a corner, but rather collaborative tools that people feel comfortable working with. It brings much-needed transparency to AI decisions and provides mechanisms for oversight and intervention. When users can see why an agent did something and have a clear way to influence or correct it, they’re far more likely to embrace these systems. Conversely, if the AI’s actions are opaque or unpredictable, trust evaporates and adoption stalls. As one design agency put it, agentic design requires rethinking interfaces: moving from traditional dashboards to more interactive, conversational, and control-oriented experiences tailored to autonomous agents daitodesign.com daitodesign.com. For enterprise product/design professionals, this is a fascinating new frontier – melding AI behavior with human-centered design so that the two work in harmony.

Scaling Up: From One Agent to a Company-Wide Platform

It’s one thing to build a single AI agent that performs well in a pilot project. It’s another to have a hundred agents deployed across an enterprise, all contributing to business goals in a coordinated way. Scaling agentic systems across large enterprise teams – involving data scientists, product designers, software engineers, IT, and more – requires careful planning. It’s as much an organizational challenge as a technical one. Here are key considerations for scaling and aligning with broader platform strategies:

Cross-Functional Collaboration and Governance: Building agentic systems at scale is inherently a cross-disciplinary effort. Data scientists might craft the AI logic or models behind an agent, engineers handle integration and infrastructure, and product designers/owners ensure it meets user needs. To succeed, these groups need to work from a shared playbook. Establish cross-functional oversight from the start – for example, create a committee or working group that includes representatives from engineering, data science, UX, and importantly, compliance/security. This group can set the standards (like the ones we discussed) and guide strategy. DataRobot’s enterprise AI guidelines advise involving legal and business stakeholders as well, to ensure alignment with company policies and objectives datarobot.com. Think of it like an AI governance board that regularly reviews what agents are being developed, how they interact, and any risks or ethical considerations. This oversight body helps break down silos; instead of each team doing its own AI project in isolation, there’s a coordinated approach that fits the company’s vision.

Platform Approach (AgentOps): Many enterprises are now treating “AI agent platforms” as a core part of their tech stack, analogous to how they treated mobile or cloud platforms. The idea is to provide central services and infrastructure that all teams can leverage when creating agents, so they don’t have to solve the same problems repeatedly. For example, a company-wide agent platform might offer: a library of common agent components (for logging, error handling, etc.), an internal API or SDK to deploy agents on the company’s cloud with one click, and a monitoring dashboard that shows all agent activities across departments. By investing in such a platform, you empower dozens of teams to build agents that plug into the same ecosystem. This also makes scaling easier – new agents benefit from existing security, scalability, and compliance measures. We’re even seeing vendor solutions in this space: for instance, UiPath’s recent “Maestro” orchestration layer is explicitly designed to integrate AI agents, robots, and people in enterprise workflows, providing the centralized oversight needed to safely scale AI-powered agents across systems and teams uipath.com. Enterprises can adopt or mirror these ideas internally. Essentially, treat agent development like a product – provide internal docs, templates, and tooling (what some call AgentOps, by analogy to DevOps/MLOps) to standardize the lifecycle from development to deployment to monitoring.

Alignment with Business Strategy: Scaling agentic systems isn’t just an IT initiative; it should serve the company’s strategic goals. This means identifying where agents can have the most impact (e.g., automating a costly manual process, enabling a new data-driven service, etc.) and prioritizing those use cases. It also means ensuring that as agents take on more functions, the business processes and teams adjust accordingly (organizational change management). A pitfall to avoid is the proliferation of “cool AI demos” that never translate into value because they aren’t integrated into actual workflows. By aligning agent development with the enterprise’s platform strategy, you make sure, for instance, that your agents are feeding into the same data lake or CRM system that other tools use, so their outputs are immediately useful. One practical approach is to embed AI agents into existing software platforms the company uses. If your company has a central data platform or a workflow system, integrate your agents there rather than creating standalone apps. This way, agents augment and automate parts of established processes, and users don’t have to go out of their way to interact with them.

Scaling Teams and Knowledge: As you move from one agent to many, invest in training and knowledge sharing. Upskill your teams on this new paradigm – data scientists need to learn more about software engineering for agents, and engineers need to learn how to work with AI behaviors. Host internal workshops or create sandboxes where teams can play with frameworks like AutoGPT or LangChain on company data (safely) to spark ideas. Encourage a culture of sharing successes and failures with agentic systems. For example, if the customer support team built a useful AI agent to route inquiries, showcase that to other departments (maybe that agent can be reused or adapted). Some companies establish an AI Center of Excellence which acts as a hub for such knowledge and even provides consulting internally for teams that want to implement agents. The goal is to avoid each team struggling up the learning curve alone. When scaled, agentic systems will likely touch every part of the business – so everyone should start developing a familiarity with how to design, use, and govern them.

Safety, Security, and Compliance at Scale: Enterprises in regulated industries (and really any large company) must pay special attention to compliance when scaling agents. What might be a minor issue in a small pilot (like an agent occasionally making an odd comment) could be a major problem when scaled (imagine an agent inadvertently breaching data privacy in an automated process). So, involve your security, risk, and compliance officers in the scaling process. Establish guardrails: for example, set rules on what data agents are allowed to access or what decisions they are allowed to make autonomously. Implement audit logging for agent decisions so that you have a record for compliance purposes (who/what took an action, based on what inputs). At scale, you might even require that certain agent actions go through an approval workflow in sensitive areas. By baking in these considerations, you avoid nasty surprises later. As one enterprise AI report noted, early AI projects can create “operational blind spots that stall progress… if left unaddressed” datarobot.com – meaning issues of data integration, testing, and oversight must be tackled to move from proof-of-concept to production. The same applies to agentic AI. Make sure your scale-up plan includes robust governance, monitoring, and guardrails – these aren’t optional; they’re critical to long-term success datarobot.com datarobot.com.
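
The audit-logging and approval ideas above can be sketched in a few lines of Python. This is an illustrative assumption, not a prescribed design: `SENSITIVE_ACTIONS`, `AuditRecord`, `AUDIT_TRAIL`, and `execute_action` are hypothetical names, and a production system would persist the trail to tamper-evident storage rather than a list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: actions in this set need a named human approver.
SENSITIVE_ACTIONS = {"issue_refund", "export_customer_data"}


@dataclass(frozen=True)
class AuditRecord:
    actor: str                  # which agent (or human) attempted the action
    action: str
    inputs: dict                # what the decision was based on
    approved_by: Optional[str]
    outcome: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


AUDIT_TRAIL: list[AuditRecord] = []


def execute_action(actor: str, action: str, inputs: dict, approved_by: Optional[str] = None) -> str:
    """Run an agent action, requiring approval for sensitive ones and auditing every attempt."""
    if action in SENSITIVE_ACTIONS and approved_by is None:
        outcome = "blocked: approval required"
    else:
        outcome = "executed"  # a real system would dispatch to the action handler here
    AUDIT_TRAIL.append(AuditRecord(actor, action, dict(inputs), approved_by, outcome))
    return outcome
```

Blocked attempts are audited too; a record of what an agent tried to do is often as valuable to compliance teams as a record of what it did.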

Finally, a note on culture: scaling agentic systems will likely change how people work. Roles may shift: agents will handle some routine tasks, while humans focus on supervision and creative problem-solving. Be transparent with your teams about these changes. Get buy-in by highlighting how agents can eliminate drudgery or improve outcomes rather than replace the human touch. When large enterprise teams rally around the idea that AI agents can be teammates rather than threats, adoption flourishes. We’ve seen companies where, after initial skepticism, employees start requesting more agentic tools once they see the benefits (e.g., an analyst might say “Could we get an agent to auto-generate this weekly report? It would save me so much time.”). That kind of bottom-up demand is a good indicator that your scaling strategy is on the right track.

Tools of the Trade and Gaps to Close

The surge in interest around agentic systems has given rise to a variety of tools and frameworks aiming to make development easier. If you’re diving into this space in 2025, you’ll encounter names like LangChain, AutoGPT, BabyAGI, Streamlit Agents, Dust, CrewAI, MetaGPT, and many more. Each comes at the problem from a slightly different angle – some focusing on chaining language model calls, others on orchestrating multiple agents with distinct “roles” or building entire agent ecosystems. Let’s briefly survey a few, and then examine where current tooling still falls short for enterprise needs:

LangChain: Perhaps the most popular library for agent development, LangChain provides a framework to connect LLMs (like GPT-4) to external tools and data, and to manage conversational chains. It offers abstractions for things like tools (APIs the agent can call), memory (storing context between calls), and multi-step reasoning. LangChain has been fantastic for quick prototypes – you can, for example, spin up an agent that answers questions by searching a document database in just a few lines of code. It also introduced the concept of chains and agents (agents being more autonomous sequences that decide which chain or tool to use next). However, many have noted LangChain is not a plug-and-play production solution. You often have to build additional layers for things like reliable citations, user interaction, or error handling. One critique put it bluntly: LangChain lets you demo something in minutes, but when your boss asks “How did it get that answer?”, you realize you must implement your own transparency and citation logic from scratch medium.com. That highlights a gap: out-of-the-box, frameworks like LangChain don’t fully address enterprise needs for traceability and verification – developers must extend them.
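
The transparency gap is easier to see with an example. The sketch below is framework-agnostic and does not use LangChain's API. It assumes a naive keyword-overlap retriever (a stand-in for a vector store) and illustrates one design choice: every answer object carries the IDs of the documents it was built from. `Document`, `TracedAnswer`, `retrieve`, and `answer_with_citations` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


@dataclass
class TracedAnswer:
    answer: str
    sources: list[str]  # doc_ids the answer was built from


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by naive keyword overlap (a stand-in for a vector store)."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(d.text.lower().split())), d) for d in docs]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def answer_with_citations(query: str, docs: list[Document]) -> TracedAnswer:
    hits = retrieve(query, docs)
    if not hits:
        return TracedAnswer("No supporting documents found.", [])
    # A real agent would pass `hits` to an LLM; here the evidence is simply
    # stitched together so that the provenance of each sentence is visible.
    body = " ".join(f"{d.text} [{d.doc_id}]" for d in hits)
    return TracedAnswer(body, [d.doc_id for d in hits])
```

Because the sources travel with the answer, the question “How did it get that answer?” has a concrete reply, even before an LLM is added to the pipeline.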

AutoGPT and “Autonomous Agents”: AutoGPT was an early open-source experiment that captured the internet’s imagination by chaining GPT calls together to attempt goal-driven behavior. Give AutoGPT a goal (like “research and write a report on X”) and it would spawn sub-tasks for itself, search the web, generate text, evaluate if done, and so on. It and similar projects (BabyAGI, AgentGPT) demonstrated what an autonomous agent might do, but they were often unpredictable and inefficient in practice. Still, they opened eyes to the possibility of AI agents that could iteratively refine their own plans. In enterprise contexts, the ideas from AutoGPT have influenced tools that try to automate multi-step business processes. For example, you might see a SalesGPT that can iterate through contacting a lead, getting info, updating CRM, following up, etc. The current gap is that these autonomous agents can be erratic and lack guardrails. They may get stuck in loops or pursue irrelevant tangents if not carefully guided. So while AutoGPT-like tools are exciting, enterprises have been cautious – they often require significant prompt engineering and oversight to be useful. The opportunity (and challenge) is to harness their ability to break complex tasks into smaller ones, without letting them “run wild” beyond what’s acceptable. We’re likely to see more robust versions (possibly from big vendors) that add business logic constraints to these free-roaming agents.
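
One common way to keep an AutoGPT-style loop from running wild is to wrap it in hard limits. The following Python sketch assumes a hypothetical `planner` callable (in practice, an LLM call) that proposes the next action from the history so far. The loop enforces a step budget and stops if the same action keeps recurring; `run_bounded` and its parameters are illustrative names.

```python
from typing import Callable, Optional

# A planner maps the actions taken so far to the next action, or returns None
# when it believes the goal is met. In practice this would be an LLM call.
Planner = Callable[[list[str]], Optional[str]]


def run_bounded(planner: Planner, max_steps: int = 10, max_repeats: int = 2) -> tuple[list[str], str]:
    """Run a goal-driven loop with two guardrails: a step budget and repeat detection."""
    history: list[str] = []
    for _ in range(max_steps):
        action = planner(history)
        if action is None:
            return history, "done"
        # Refuse an action that has already been taken `max_repeats` times.
        if history.count(action) >= max_repeats:
            return history, f"halted: '{action}' repeated too often"
        history.append(action)
    return history, "halted: step budget exhausted"
```

Both limits are deliberately blunt. They do not make the planner smarter, but they turn an endless or circular run into a bounded, explainable failure.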

Multi-Agent Orchestration Frameworks (CrewAI, MetaGPT, etc.): Beyond single agents, frameworks like CrewAI are built to manage multiple agents working together. CrewAI (an open-source project by João Moura) lets you define a team of agents, each with a role (e.g., a “Writer” agent and a “Critic” agent working together on a text). It handles the messaging and coordination logic between these agents ibm.com. Similarly, MetaGPT (an open-source framework introduced in an academic paper) sets up an entire “company” of GPT-based agents with roles such as product manager, architect, and engineer, which communicate to complete a project collaboratively. These frameworks are fascinating because they mirror human organizational structures – you could imagine in an enterprise setting a “virtual team” of agents, one querying data, another analyzing, another drafting a report, all orchestrated by a lead agent. Right now, these are mostly experimental. The enterprise gap here is debugging and reliability: when multiple agents are chatting among themselves, it can be hard to follow the chain of thought or ensure they don’t collectively drift into error. There’s also a performance concern – more agents mean more API calls (and costs). However, for complex tasks, multi-agent systems could prove very powerful, and tools like CrewAI give a taste of that potential in a more controlled way (it’s “completely independent of LangChain” and built for speed and minimal overhead github.com).
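
The debugging concern is easier to address when every message between agents passes through one place. Here is a minimal Python sketch of a Writer/Critic pair coordinated by an orchestrator that records a transcript. It does not use CrewAI's actual API: `Agent`, `Orchestrator`, and `write_and_review` are hypothetical, and each agent's `respond` function stands in for a role-conditioned LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    role: str
    respond: Callable[[str], str]  # stands in for an LLM call conditioned on the role


@dataclass
class Orchestrator:
    transcript: list[tuple[str, str]] = field(default_factory=list)

    def send(self, agent: Agent, message: str) -> str:
        reply = agent.respond(message)
        # Every hop is recorded so humans can replay how the team reached its output.
        self.transcript.append((agent.name, reply))
        return reply

    def write_and_review(self, writer: Agent, critic: Agent, task: str, max_rounds: int = 3) -> str:
        draft = self.send(writer, task)
        for _ in range(max_rounds):
            feedback = self.send(critic, draft)
            if feedback == "APPROVED":
                break
            draft = self.send(writer, f"Revise: {draft}\nFeedback: {feedback}")
        # If the critic never approves, the latest (unreviewed) draft is returned.
        return draft
```

After a run, `transcript` lists each hop in order, which is the raw material for tracing how a team of agents drifted into (or out of) an error. The `max_rounds` cap also bounds the number of model calls. If the critic never approves, the final draft comes back unreviewed, so a real system would flag that case for a human.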

Enterprise AI Platforms and Custom Solutions: Big players are entering the fray too. For example, Salesforce is integrating agentic concepts into its platform (“Agentforce”), and UiPath has launched an enterprise agent platform focusing on orchestration and governance uipath.com uipath.com. These solutions aim to combine AI agents with existing automation (RPA) and workflow tools, addressing enterprise concerns around security and compliance. They often come with visual designers to build agent workflows and pre-built connectors to business applications. The advantage for enterprises is the familiar ecosystem and support. The disadvantage might be vendor lock-in or less flexibility compared to open-source tools. We also see custom in-house frameworks at many companies – essentially homegrown agent platforms built on top of APIs like OpenAI’s, tailored to their specific needs and using their proprietary data.

Despite this rich landscape of tools, gaps remain before agentic systems truly become a mainstream, plug-and-play part of enterprise tech. Some of the notable gaps and challenges are:

Interoperability and Standards: Right now, each framework has its own way of defining and running agents. There’s no universally agreed-upon “protocol” for how an agent exposes itself or communicates. This is akin to the early days of APIs before REST became standard – today, we lack a common “language” for agents. However, efforts are underway. For instance, Anthropic introduced the Model Context Protocol (MCP) as an open standard for connecting AI assistants to tools and data, and Google proposed the Agent2Agent (A2A) protocol for agents to communicate and delegate tasks to each other medium.com medium.com. A2A envisions a world where an agent can advertise its capabilities in a standardized format (a bit like a service registry for agents) and agents from different vendors can interoperate over a network. These standards are still in flux, but enterprise adopters should keep an eye on them. In the near future, we might see something like “the HTTP for agents” – a uniform way to expose an agent’s API and negotiate interactions. Until that solidifies, integrating tools from different ecosystems (say, a LangChain agent with a UiPath agent) might require custom glue code.
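
To illustrate the “service registry for agents” idea, here is a small Python sketch of capability descriptors and a registry that looks agents up by skill. It is only loosely inspired by A2A's notion of an agent advertising its capabilities; the `AgentCard` fields and `AgentRegistry` methods are illustrative assumptions, not the protocol's actual schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentCard:
    """Illustrative capability descriptor; field names are assumptions, not the A2A schema."""
    name: str
    endpoint: str   # where the agent accepts requests
    version: str
    skills: list[str] = field(default_factory=list)


class AgentRegistry:
    """In-memory registry; a shared deployment would back this with a database or service."""

    def __init__(self) -> None:
        self._cards: dict[str, AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        # Re-registering under the same name replaces the old card (e.g. a new version).
        self._cards[card.name] = card

    def find_by_skill(self, skill: str) -> list[AgentCard]:
        return [card for card in self._cards.values() if skill in card.skills]

    def to_json(self, name: str) -> str:
        """Serialize a card so that agents built on other stacks could read it."""
        return json.dumps(asdict(self._cards[name]))
```

A calling agent would query the registry for a skill (say, `"load_forecast"`) and then contact whichever endpoint comes back, rather than hard-coding which agent to talk to.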

Reliability and Consistency: Many current agent tools are optimized for flexibility and capability rather than predictability. In enterprise settings, you often care less about the AI doing something super creative, and more about it doing the right thing consistently and handling edge cases. Ensuring reliability means more testing and validation of agents, which tooling doesn’t fully support yet. For example, how do you unit test a chain of prompts? How do you simulate an agent’s behavior under different scenarios systematically? These are active areas of development. LangChain and others have started adding tracing and evaluation modules, but these are not yet as robust as traditional software testing tools. The gap is closing, but until we have strong validation frameworks, many enterprises will be hesitant to let agents operate completely autonomously on critical processes. It’s telling that a DataRobot article pointed out the difficulty in understanding how decisions are made or pinpointing errors without “model tracking or auditability” in place datarobot.com – in other words, lack of traceability can stall adoption. Enterprises need better tools for end-to-end visibility into agent decisions (which ties into our earlier point on logging and UX).
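
One practical answer to “how do you unit test a chain of prompts?” is to put the model behind an interface and substitute a scripted fake in tests, so the agent's own logic (parsing, routing, fallbacks) can be checked deterministically. The sketch below uses hypothetical names (`ScriptedLLM`, `route_ticket`, `ROUTES`). It tests routing, not model quality, which still needs separate evaluation.

```python
from typing import Protocol


class LLM(Protocol):
    def complete(self, prompt: str) -> str: ...


class ScriptedLLM:
    """Deterministic stand-in for a model: returns canned replies keyed by substring."""

    def __init__(self, script: dict[str, str], default: str = "unknown") -> None:
        self.script = script
        self.default = default
        self.prompts: list[str] = []  # recorded so tests can inspect what was sent

    def complete(self, prompt: str) -> str:
        self.prompts.append(prompt)
        for key, reply in self.script.items():
            if key in prompt:
                return reply
        return self.default


ROUTES = {"billing": "finance-queue", "outage": "grid-ops-queue"}


def route_ticket(llm: LLM, ticket: str) -> str:
    label = llm.complete(f"Classify this ticket as billing or outage: {ticket}").strip().lower()
    # Anything outside the known labels goes to a human instead of guessing.
    return ROUTES.get(label, "human-review")


def test_route_ticket() -> None:
    llm = ScriptedLLM({"power is out": "Outage", "charged twice": "billing"})
    assert route_ticket(llm, "The power is out on Elm St") == "grid-ops-queue"
    assert route_ticket(llm, "I was charged twice") == "finance-queue"
    assert route_ticket(llm, "Can I change my name?") == "human-review"
    assert len(llm.prompts) == 3
```

A test runner such as pytest would pick up `test_route_ticket` automatically; the same fake can be scripted to return malformed or unexpected replies to exercise the fallback path.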

Tool Integration and Fragmentation: Paradoxically, while agents are supposed to integrate tools, the agent development toolset itself is fragmented. One company might use one approach for an AI assistant in customer service and a completely different approach for an AI ops agent in DevOps, leading to duplicate effort and inconsistent outcomes. The nascent agentic AI landscape makes it hard to choose the right tooling, and committing to a single tool too early could create vendor lock-in or technical debt datarobot.com. This is a gap in the market – a clear winner or a more unified framework hasn’t fully emerged (yet). For now, enterprises often have to support multiple frameworks or bet on one and hope it covers all their use cases. Open standards (as mentioned) could alleviate this by allowing different frameworks to talk to each other, but we’re in the early days. The practical advice is to stay flexible: design your agent logic in a modular way so that the underlying framework can be swapped out if needed. Keep an eye on projects that aim to act as meta-frameworks capable of interfacing with many others.
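
Designing for swappability usually means the agent depends on a narrow interface rather than on a framework directly. In this Python sketch, `TriageAgent` only knows the hypothetical `ChatBackend` protocol; `CannedBackend` and `KeywordBackend` are placeholder adapters, where real ones would wrap a specific framework or vendor SDK.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """The only surface the agent logic is allowed to depend on."""

    def generate(self, system: str, user: str) -> str: ...


class CannedBackend:
    """Hypothetical adapter; a real one would wrap a framework or vendor SDK."""

    def __init__(self, reply: str) -> None:
        self.reply = reply

    def generate(self, system: str, user: str) -> str:
        return self.reply


class KeywordBackend:
    """A second hypothetical adapter, showing that swapping needs no agent changes."""

    def generate(self, system: str, user: str) -> str:
        return "ESCALATE" if "urgent" in user.lower() else "OK"


class TriageAgent:
    SYSTEM_PROMPT = "Reply ESCALATE for urgent issues, otherwise OK."

    def __init__(self, backend: ChatBackend) -> None:
        self.backend = backend

    def triage(self, message: str) -> bool:
        """Return True when the message should be escalated."""
        return self.backend.generate(self.SYSTEM_PROMPT, message).strip() == "ESCALATE"
```

Changing frameworks then means writing one new adapter class, while the agent logic and its tests stay untouched.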

Security, Compliance, and Policy Enforcement: Current open-source agent tools typically do not have built-in notions of security policy, user authentication, data access control, etc. They’ll happily let an agent attempt whatever you prompt it to. In an enterprise, you need to enforce rules like “This agent can only read data from database X and nothing else” or “Agents cannot call external APIs that aren’t whitelisted.” Implementing those controls is currently a manual effort – either wrapping the agent environment or building custom middleware. Enterprise platforms (like UiPath’s) are beginning to address this by integrating with identity management and providing guardrails at the platform level uipath.com. Still, for many using generic tools, establishing security boundaries is a gap – one that absolutely must be filled before wider deployment. No one wants an AI agent accidentally emailing out confidential data or making trades above its authorization. Until frameworks natively support role-based access, encryption, and compliance logging, enterprises will have to bolt these on themselves. This is an area likely to see rapid improvement, as trust is a big blocker to adoption.
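
Until frameworks enforce such rules natively, a thin policy layer in front of every data access and outbound call is one way to bolt them on. The Python sketch below assumes hypothetical per-agent policies (`POLICIES`) with allowlisted tables and hosts, and it denies by default: an agent with no policy can do nothing. `AgentPolicy`, `check_query`, and `check_http` are illustrative names.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


class PolicyViolation(Exception):
    pass


@dataclass(frozen=True)
class AgentPolicy:
    allowed_tables: frozenset[str]
    allowed_hosts: frozenset[str] = field(default_factory=frozenset)


# Hypothetical per-agent policies, e.g. loaded from the platform's config store.
POLICIES = {
    "reporting-agent": AgentPolicy(allowed_tables=frozenset({"meter_readings"})),
    "weather-agent": AgentPolicy(
        allowed_tables=frozenset({"forecasts"}),
        allowed_hosts=frozenset({"api.weather.example.com"}),
    ),
}


def check_query(agent: str, table: str) -> None:
    """Raise unless this agent is explicitly allowed to read this table."""
    policy = POLICIES.get(agent)
    if policy is None or table not in policy.allowed_tables:
        raise PolicyViolation(f"{agent} may not read {table}")


def check_http(agent: str, url: str) -> None:
    """Raise unless the URL's host is on this agent's allowlist."""
    policy = POLICIES.get(agent)
    host = urlparse(url).hostname or ""
    if policy is None or host not in policy.allowed_hosts:
        raise PolicyViolation(f"{agent} may not call {host or url}")
```

In practice these checks would sit in the middleware that executes tool calls, so the agent cannot bypass them simply by being prompted differently.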

Cost and Performance Optimization: Running agentic systems, especially those backed by large language models, can be expensive (API calls to GPT-4 aren’t cheap at scale) and sometimes slow (latency as the agent reasons or calls multiple services). Current tools don’t always optimize this – they prioritize capability. The gap here is better resource management: caching results when appropriate, batching calls, using cheaper/smaller models when a task doesn’t need the big guns, etc. Enterprises will want tools that intelligently route tasks – e.g., a quick classification might use an in-house model, whereas a complex reasoning task uses a powerful external API, all abstracted from the developer. Efforts like model distillation, on-prem model hosting, and adaptive throttling need to be woven into agent frameworks to control costs and performance at scale. Otherwise, a naive agent deployment could rack up API bills or slow down user-facing processes. This is not a trivial challenge, but as agent usage grows, expect cost-management features to become a must-have in enterprise agent ops (similar to how cloud management platforms arose when cloud usage exploded).
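
A cost-aware router with a cache captures two of the optimizations mentioned above. In this Python sketch, the small and large models are hypothetical callables with made-up per-call costs, and routing is by a declared task type; a real router might instead use a classifier or token estimates. `CostAwareRouter` is an illustrative name.

```python
from typing import Callable

# Hypothetical model callables; real costs depend on vendor pricing and token counts.
Model = Callable[[str], str]


class CostAwareRouter:
    def __init__(self, small: Model, large: Model, small_cost: float, large_cost: float) -> None:
        self.models = {"small": (small, small_cost), "large": (large, large_cost)}
        self.cache: dict[tuple[str, str], str] = {}
        self.spend = 0.0

    def pick(self, task_type: str) -> str:
        # Simple tasks go to the cheap model; everything else gets the big one.
        return "small" if task_type in {"classify", "extract"} else "large"

    def run(self, task_type: str, prompt: str) -> str:
        tier = self.pick(task_type)
        key = (tier, prompt)
        if key in self.cache:  # repeated requests cost nothing
            return self.cache[key]
        model, cost = self.models[tier]
        result = model(prompt)
        self.spend += cost
        self.cache[key] = result
        return result
```

Keying the cache on both tier and prompt avoids serving a small-model answer to a request that was meant for the large model.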

Despite these gaps, the trajectory is clear: the ecosystem is rapidly maturing. The fact that major enterprise software players and a vibrant open-source community are both pushing forward means solutions will evolve quickly. As a product or design leader in AI, you should actively monitor these developments, pilot the tools that seem promising (in safe, controlled experiments), and even contribute to the conversation around standards. We are essentially witnessing the formation of a new layer of the software stack – the “agentic” layer – and those who shape it now will set the patterns for years to come.

Conclusion:

Agentic systems are transitioning from an intriguing concept to a practical reality in the enterprise. By defining clear architectures, following best practices in development, focusing on UX for trust and transparency, and addressing organizational and tooling challenges, we can unlock their full potential. The vision of a Global Agent Repository and standard is about making this technology scalable and manageable – so that any team in a company can safely deploy an AI agent the way they would spin up a microservice, and have it instantly integrate into the collective intelligence of the organization.

For industries like energy, these systems offer not just efficiency gains but a chance to reimagine operations – autonomous grids, proactive maintenance, intelligent trading – all coordinated by fleets of AI agents working in concert. But regardless of industry, the core principles remain: design agents with purpose and discipline, empower users to understand and guide them, and create an ecosystem where agents are as governed and reliable as any other enterprise application.

We are still in the early days. Today’s tools and standards will likely evolve, and new best practices will emerge as we learn from real deployments. It’s an exciting journey that merges cutting-edge AI with time-tested engineering and design wisdom. By staying grounded in solid architecture and empathetic UX, we can avoid the hype traps and build agentic systems that truly deliver value. The companies that get this right will not only improve their processes but could reshape how work gets done – with human talent and artificial agents each doing what they do best. The future of enterprise AI is agentic, and it’s being built right now. Are you ready to be a part of it?

Sources:

Reddit – “Global agent repository and standard architecture” discussion (Best practices for standardizing and managing AI agents) reddit.com reddit.com.

Reddit – Advice on treating agents as composable services with standard contracts and shared runtime reddit.com.

Reddit – Practitioner’s checklist for agent standardization (JSON schemas, registry, naming conventions, DAG composition) reddit.com reddit.com.

XenonStack Blog – “Agentic AI in Energy Sector” (Examples of autonomous agents in grid management, trading, renewables) xenonstack.com.

Daito Design Blog – “Rethinking UX for Agentic Workflows” (UX patterns for agentic systems: autonomy spectrum, intent transparency, mission-control UI) daitodesign.com.

UiPath Press Release – Enterprise Agentic Automation Platform (Need for orchestration and oversight to scale AI agents in business-critical workflows) uipath.com.

DataRobot Blog – “The enterprise path to agentic AI” (Importance of cross-functional oversight, governance, and the challenges of tool fragmentation and traceability in scaling agents) datarobot.com.

Medium – “LangChain is NOT for production use. Here is why.” by Alden D. (Discussion of gaps in current frameworks like LangChain around transparency, maintenance, and cost) medium.com.

Medium – “What Every AI Engineer Should Know About A2A, MCP & ACP” by Edwin Lisowski (Emerging standards for agent communication and interoperability, e.g. Google’s A2A protocol) medium.com.

Ridgeant Blog – “Agentic AI Cross-Functional Intelligence” (Definition of agentic AI and its role in breaking down data silos in enterprises) ridgeant.com.